From Pixels to Emotion or Fake it Till You Make It: How AI-Generated Ads Impact Donation Intentions

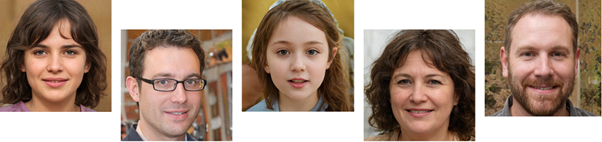

Do you know any of the people in these pictures?

Neither do I. Actually, I ‘created’ them using this AI-powered website: https://thispersondoesnotexist.com

These people do not exist. Yet their faces are realistic enough to make us believe they belong to real people. This can become a problem for how consumers perceive a brand, especially in the charity sector, as we'll see.

Studies have shown there is already a so-called algorithm aversion (Dietvorst, Simmons, and Massey 2015): a negative bias toward interacting with algorithms in certain scenarios. Synthetic media such as deepfakes (around since 2018) are a good example of the potential of AI algorithms to deceive and influence public opinion.

(You might remember The Shining deepfake, in which Jim Carrey's face was superimposed onto Jack Nicholson's character in one particular scene.) Around the same time, Edmond de Belamy, which was marketed as the first painting created by an AI algorithm, sold for $432,500. Algorithm aversion can hardly explain its success.

That was 2019. Less than four years later, we live in a new era ('post AI'). We still lack the empirical evidence to show to what extent synthetic (fake) content in advertising is actually a barrier to building trust between consumers and organisations, both for-profit and not-for-profit.

What we are becoming more aware of is this: employing AI-generated content as a tool to influence, inform, and predict behavior through data-mining techniques (Davenport et al. 2020) can backfire, particularly in charitable contexts.

AI and Consumer Emotions

Just two months ago, the UK charity Charity Right sparked an intense Reddit debate (and subsequent media coverage) by using what appeared to be fake images in its advertisements.

The reactions to this campaign brought fresh attention to three recent experimental studies conducted by a team of researchers from Australia, Brunei and Finland.

The researchers tested how potential donors reacted to advertising messages featuring content generated by an AI neural network, specifically a GAN (generative adversarial network) trained on a data set of human faces. The emotion displayed in these ads was sadness.

Before we delve into the findings, let's consider this: why is emotional appeal so fundamental in charitable giving campaigns? Human faces, especially those of children, are a strong trigger for an emotional response in donors (Cao and Jia 2017). As humans, we are highly visual and can extract information from facial expressions and body language (Tsao and Livingstone 2008). This information is the foundation of our emotions and subsequent actions.

When deciding whether to donate to a charitable cause, we first put ourselves in the recipients' shoes (we empathize with them), then we experience moral emotions (such as anger or guilt) that ultimately influence our donation intention (Arango, Singaraju, and Niininen 2023).

We are more inclined to empathize with those who are closer to us in time or space. This is called psychological distance, a crucial factor in cognitive empathy (Liberman, Trope, and Stephan 2007).

AI and Marketing Implications

These recent experimental studies found that AI-generated images in marketing and advertising campaigns have a negative impact on donation intentions, even when the use of AI-generated content is fully disclosed.

It turns out people find it hard to empathize with synthetic faces of human beings who do not exist. No empathy, no emotion perception, no donation intention. At least for now. As technology evolves and we become more knowledgeable about it, our perception may shift. Projects like the Deepfake Detection Challenge hosted on Kaggle, which aims to identify AI-manipulated media, will hopefully give consumers the tools they need to make informed decisions.

In the case of Charity Right, one comment poignantly captures the lack of empathy toward synthetic faces:

“I don’t mind AI art, but if you can’t get an actual photo of a hungry child, how can we believe that any donations are actually being used to feed them. It just seems strange to me. Maybe they are a legit charity I dunno.”

If charities decide to use synthetic images in their ads, the studies suggest they should disclose it, or risk losing the public's positive perception of their organisation. A disclosure statement (e.g. "AI-generated image. Help us protect children's privacy") combined with clear transparency about the ethical motives behind the use of the images had the most positive effect on donation intention in the experimental studies. Still, even this did not match the effect of using real images of real people.

Consumers consider the use of AI images by charities acceptable only in extraordinary circumstances (such as disaster relief, as opposed to educational campaigns). In those circumstances, AI images are likely to lead to outcomes similar to those of real images.

Charities, which usually work on low budgets, find synthetic images quite appealing because they are so accessible. They are cost-effective and copyright-friendly, and they offer quality and diversity. There may also be ethical reasons to use them in marketing (such as protecting a child's privacy). Regardless of the motive, donation intention still drops unless the synthetic image is used as a last resort (in an emergency where no alternative seems available).

This conversation between a charity worker and an empathizer, as part of the same Charity Right Reddit thread, is a great example of how this dynamic plays out:

The experimental studies were limited to the use of synthetic still images in charitable advertising; they did not examine videos. More experimental and empirical research is needed to assess the impact of AI on consumer behaviour going forward.

A distinction must also be made between manipulative and non-manipulative intent when not-for-profit organisations use AI-generated images or methods to achieve campaign success.

There are situations where consumers can easily identify the untrue elements of an ad and do not hold them against the advertisers. This was the case with the "Malaria Must Die" campaign led by a team of scientists, doctors, and activists, which featured a video of David Beckham appearing to speak nine languages. Viewers were likely to realise that the multilingual delivery was synthetic. The ad was obviously intended not to deceive or make a financial gain, but to connect with its audience. Hence the public acceptance.

Take Home Notes:

Real, authentic faces have a higher chance of success in charity advertising campaigns. Synthetic advertising creatives have not yet been shown to be well received across markets. Whether it's Amnesty International in Colombia or a well-known charity in Canada, the media coverage reflects a negative brand perception in these instances.

Charities are encouraged to carefully weigh the pros and cons of using AI-generated images. The acquisition cost might be considerably lower, but donations might take a big hit. It would be advisable to research audience sentiment before releasing such a campaign, or to wait for industry-level empirical surveys.

If cost and ethics are the drivers for using synthetic content, charities can benefit from disclosing their reasons, especially the ethical motive behind the decision. Soon, disclosure might become a legal requirement. The EU's AI Act received significant backing in the European Parliament and is poised to be a key step in global AI regulation. Under the proposal, companies would have to label AI-generated content to prevent AI from being abused to spread falsehoods.

Charities can keep an eye on, and take advantage of, the transformations currently taking place in marketing and communications, as AI is set to enhance advertising models. Since Snapchat released its "My AI" chatbot in February this year, 150 million users have sent over 10 billion messages to their new AI friends. That allows Snap to better understand its users, which means better ad targeting. There is a lot of negative feedback around "My AI". Yet it's worth noting that this model can bypass web user tracking restrictions and Apple's privacy limitations. These AI chatbots are useful for more than just improving the AI model: the interactions with customers are a gold mine of data for tailored advertising strategies.

We've come a long way since "Blue Jeans and Bloody Tears", one of the first songs created by an AI algorithm, in 2019. Starting this year, songs with AI contributions will be eligible for nomination at the Grammys, provided there is evidence of substantial human involvement in their creation.